As detection engineers, we live and breathe the cycle of vulnerability disclosure, proof-of-concept (PoC) analysis, and signature development. When CVE-2024-XXXXX drops on a Tuesday morning, we’re already pulling GitHub repositories, scanning blog posts, and reverse-engineering exploits before our caffeinated beverage of choice gets cold/warm. Speed matters in this fight—the faster we can analyze a working PoC and translate it into actionable detection logic, the better we can help customers and the community protect networks from the inevitable exploitation wave.

But there’s a new problem poisoning this well-established workflow: AI-generated PoCs that look legitimate at first glance but crumble under technical scrutiny. These “vibe-coded” exploits are flooding public repositories and security blogs, creating a dangerous signal-to-noise problem that’s wasting precious research cycles and, worse, potentially leading to ineffective detections.

The Traditional PoC-to-Detection Pipeline

Before looking at the problem, let’s establish the baseline. A typical PoC-to-detection workflow looks like this:

- 0/n-Day or CVE Release: Knowledge of attacks, exploits, or bona fide vulnerabilities starts to spread across the cybersecurity community

- PoC Discovery: Scanning GitHub, security blogs, exploit databases, and researcher Twitter for working exploits

- Validation: Confirming the PoC actually works against vulnerable targets

- Traffic Analysis: Understanding the network signatures, HTTP patterns, and behavioral indicators

- Rule Development: Translating technical artifacts into (in our case) Suricata rules, Sigma detections, or custom logic

- Testing & Tuning: Validating detection efficacy and minimizing false positives

This process relies heavily on the assumption that publicly available PoCs are functionally accurate. When a researcher publishes exploit code, we expect it to reflect real attack patterns we’ll see in the wild. That assumption is increasingly dangerous.

The Rise of Vibe-Coded PoCs

Enter the era of AI-assisted security research. Large language models have democratized exploit development in ways both beneficial and problematic. On the positive side, they’ve helped legitimate researchers rapidly prototype and iterate on complex exploits. On the negative side, they’ve enabled a flood of superficially plausible but technically broken PoCs.

These AI-generated exploits typically exhibit several telltale characteristics:

Hallucinated Technical Details: The code references non-existent API endpoints, uses incorrect parameter names, or implements authentication bypasses that don’t actually work. The exploit might target /api/v1/admin/users when the real vulnerability exists in /admin/user-management/bulk-update.

Generic Payload Patterns: AI models trained on existing exploit code tend to regurgitate common payload structures without understanding the specific vulnerability context. You’ll see SQL injection attempts using ' OR 1=1-- against command injection vulnerabilities, or buffer overflow exploits with hardcoded offsets that work nowhere.

Plausible but Wrong HTTP Headers: AI-generated web exploits often include HTTP headers that sound security-relevant but serve no functional purpose for the specific vulnerability. Headers like X-Forwarded-For: 127.0.0.1 or User-Agent: Mozilla/5.0 (compatible; SecurityScanner) get cargo-culted into unrelated exploits.

Copy-Paste Error Propagation: When AI models generate code based on multiple sources, they often blend incompatible approaches. You might see a deserialization exploit that simultaneously attempts both Python pickle and Java serialization attacks.
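Several of these tells can be checked mechanically before anyone spends time in a lab. The sketch below is a rough "PoC lint" that rests on a few assumptions. You already have the PoC's source text, its request headers, and any embedded payload blobs extracted. You keep a list of routes confirmed from the advisory or vendor code. The marker lists are small, hypothetical starting points. A hit means "review manually," not "AI-generated."

```python
import base64
import binascii
import pickle
import re

# Absolute URL paths inside string literals, e.g. "/api/v1/admin/users".
PATH_RE = re.compile(r"""["'](/[A-Za-z0-9_\-./]+)["']""")
# Classic SQL injection strings (hypothetical, deliberately tiny marker set).
SQLI_RE = re.compile(r"'\s*or\s+1\s*=\s*1|union\s+select", re.IGNORECASE)
# Header values that often get cargo-culted into unrelated PoCs (hypothetical list).
CARGO_CULT = {
    "x-forwarded-for": {"127.0.0.1", "localhost"},
    "user-agent": {"mozilla/5.0 (compatible; securityscanner)"},
}
# Java serialization stream header: STREAM_MAGIC (0xACED) + STREAM_VERSION (5).
JAVA_MAGIC = b"\xac\xed\x00\x05"


def unverified_paths(source: str, known_routes: set[str]) -> list[str]:
    """URL paths referenced in the PoC that you could not confirm elsewhere."""
    return sorted(p for p in set(PATH_RE.findall(source)) if p not in known_routes)


def cargo_culted_headers(headers: dict[str, str]) -> list[str]:
    """Header names whose values are on the watch-list (case-insensitive)."""
    return [
        name for name, value in headers.items()
        if value.strip().lower() in CARGO_CULT.get(name.lower(), set())
    ]


def wrong_payload_class(payload: str, vuln_class: str) -> bool:
    """True if a non-SQLi vulnerability is being 'exploited' with SQLi strings."""
    return vuln_class != "sqli" and SQLI_RE.search(payload) is not None


def serialization_formats(blob: bytes) -> set[str]:
    """Guess serialization formats from stream headers, also trying a base64 decode."""
    candidates = [blob]
    try:
        candidates.append(base64.b64decode(blob, validate=True))
    except binascii.Error:
        pass  # not valid base64; only inspect the raw bytes
    found = set()
    for data in candidates:
        if data.startswith(JAVA_MAGIC):
            found.add("java")
        # Pickle protocol 2+ starts with the PROTO opcode (0x80) and a version byte.
        if len(data) >= 2 and data[0] == 0x80 and 2 <= data[1] <= 5:
            found.add("pickle")
    return found


if __name__ == "__main__":
    print(unverified_paths('url = base + "/api/v1/admin/users"', {"/admin/user-management/bulk-update"}))
    print(cargo_culted_headers({"X-Forwarded-For": "127.0.0.1", "Accept": "*/*"}))
    print(wrong_payload_class("' OR 1=1--", "cmdi"))
    # A single "exploit" carrying both a pickle and a base64 Java stream is a red flag.
    blobs = [pickle.dumps({"cmd": "id"}, protocol=4), base64.b64encode(JAVA_MAGIC + b"payload")]
    print(sorted(set().union(*(serialization_formats(b) for b in blobs))))
```

The checks only look at surface features, so they are cheap enough to run on every PoC in a triage queue and leave the real judgment to validation.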

The Detection Engineering Impact

The proliferation of these broken PoCs creates several dangerous scenarios for detection engineers:

False Pattern Recognition

When multiple AI-generated PoCs for the same vulnerability use similar (but incorrect) attack patterns, it’s easy to assume these patterns represent real attacker behavior. A detection engineer might develop rules targeting these hallucinated artifacts, creating blind spots where the actual exploit traffic differs significantly.

Consider a hypothetical RCE vulnerability in a popular web framework. If five different AI-generated PoCs all use the incorrect endpoint /api/execute instead of the real vulnerability path /framework/handler/exec, any detection logic focused on the wrong endpoint will miss actual attacks.
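A toy illustration of that blind spot, reusing the two hypothetical paths from the example above. Real rules would be written in Suricata or Sigma, not Python. This only models the matching logic, as a simple prefix match on the request path.

```python
from urllib.parse import urlsplit


def rule_matches(request_target: str, rule_path: str) -> bool:
    """Simplified stand-in for a URI content match: does the request path start with rule_path?"""
    return urlsplit(request_target).path.startswith(rule_path)


# Both paths are the hypothetical ones from the example above.
hallucinated_rule = "/api/execute"               # what the AI-generated PoCs used
real_attack = "/framework/handler/exec?cmd=id"   # what exploitation actually looks like

print(rule_matches(real_attack, hallucinated_rule))          # False: the real attack is missed
print(rule_matches(real_attack, "/framework/handler/exec"))  # True
```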

Resource Drain and Research Fatigue

Validating broken PoCs wastes substantial research time. A detection engineer might spend hours setting up test environments, debugging non-functional exploit code, and trying to understand why a “working” PoC fails consistently. This time drain delays the development of effective detections and burns out research teams.

Detection Logic Pollution

Worse than wasted time is polluted detection logic. Rules developed from broken PoCs might trigger on legitimate traffic patterns while missing actual attacks. If an AI-generated PoC uses malformed HTTP requests that real attackers wouldn’t send, any detection rule based on that pattern becomes a false positive generator.
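One guard against polluted logic is to score a candidate rule against labeled traffic rather than against the PoC alone. The following is a minimal sketch. It assumes you have request samples labeled as confirmed exploitation or benign. The samples, the `Sample` type, and the regex-based rule are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Sample:
    request: str       # raw request line or URI
    is_exploit: bool   # label from confirmed exploitation, not from a PoC


def evaluate(rule: re.Pattern[str], samples: list[Sample]) -> dict[str, int]:
    """Count true/false positives and negatives for a regex rule over labeled samples."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for s in samples:
        hit = rule.search(s.request) is not None
        if hit and s.is_exploit:
            counts["tp"] += 1
        elif hit:
            counts["fp"] += 1
        elif s.is_exploit:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


corpus = [
    Sample("POST /framework/handler/exec?cmd=id HTTP/1.1", True),
    Sample("GET /api/execute HTTP/1.1", False),  # benign traffic that happens to match
    Sample("GET /index.html HTTP/1.1", False),
]
print(evaluate(re.compile(r"/api/execute"), corpus))
# {'tp': 0, 'fp': 1, 'fn': 1, 'tn': 1}
```

A rule built from a broken PoC shows up exactly as this pattern: no true positives, some false positives, and the real attack counted as a miss.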

Real-World Consequences: A Case Study

Howdy! h0wdy here! As a researcher and detection engineer at GreyNoise, I have the pleasure of coming across these fake proofs of concept with increasing regularity. Just in the past two days, I’ve run into two (not so) funny examples:

CVE-2025-20281 and CVE-2025-20337

It was a request to write a new tag for CVE-2025-20337 that led me down the road to CVE-2025-20281. Both relate to an unauthenticated RCE vulnerability in the Cisco ISE API. Reading through the original blog published by Zero Day Initiative, I couldn’t tell which CVE had been assigned to which of the underlying vulnerabilities used to achieve code execution. I now know that the Java deserialization vulnerability is CVE-2025-20281 and the container escape is CVE-2025-20337 (shout-outs to our friends at VulnCheck for clearing this up!), but before that was clear, I came across some other PoCs on GitHub and thought they might be the elusive CVE-2025-20337. Much like this one, these exploits mentioned CVE-2025-20281 but targeted a totally different endpoint than the one mentioned in the Zero Day blog: /ers/sdk#_. Also, the actual payload didn’t resemble the example given in the blog! The vibe-coded PoC payload:

```python
payload = {
    "InternalUser": {
        "name": f"pwn; {cmd}; #",
        "password": "x",  # dummy, ignored by vuln
        "changePassword": False
    }
}
```

The payload depicted in the blog:

I knew this was fishy and couldn’t see any way it could be CVE-2025-20337. Fortunately, I had the added benefit of being able to verify my suspicions with the diligent vulnerability researchers at VulnCheck. Without them, this could easily have turned into a day-long diversion for someone with my limited vulnerability research experience.

CVE-2025-20188

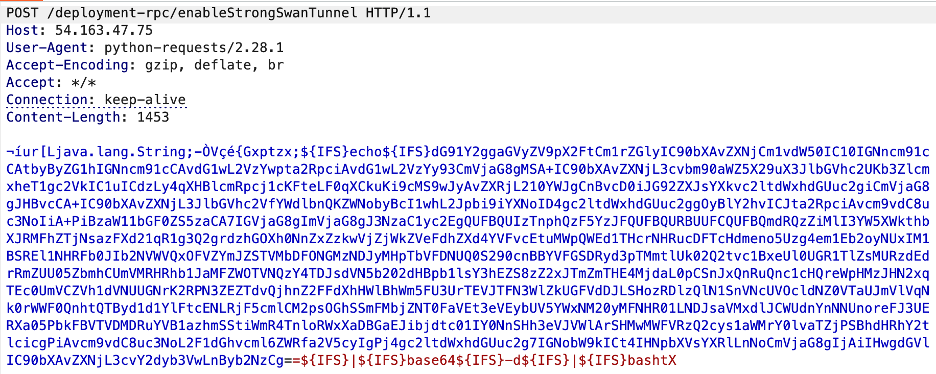

Then, the next day, while sifting through our data feed, I came across this head-scratcher of a pcap:

```http
POST /aparchive/upload HTTP/1.1
Accept-Encoding: gzip, deflate
Accept: */*
Connection: keep-alive
Content-Length: 431
Content-Type: multipart/form-data; boundary=b6db3d195550e2b6f7687268e5aafea6
Cookie: jwt=eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJyZXFpZCI6ImNkYl90b2tlbl9yZXF1ZXN0X2lkMSIsImV4cCI6MTc1MzUxOTUxNn0.K7NW4LxeOjrada6-F2sUqY6Qg3iW3YTq_wY63KE5vk0
Host: gh05713
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36

--b6db3d195550e2b6f7687268e5aafea6
Content-Disposition: form-data; name="file"; filename="../../../bootflash/startup-config"
Content-Type: text/plain

#!/bin/bash
# Cisco IOS XE User Creation via CVE-2025-20188 <-- note from h0wdy: yes. this was in the pcap. yes. this is odd.
username ahmed privilege 15 secret ahmed
username ahmed autocommand enable
line vty 0 15
login local
transport input ssh telnet
enable secret ahmed
service password-encryption
--b6db3d195550e2b6f7687268e5aafea6--
```

A quick Google search led me to CVE-2025-20188, another remote code execution vulnerability in Cisco IOS XE software, resulting from a hard-coded JSON Web Token (JWT) and a path traversal. The JWT in the Cookie of that request matches one of the criteria for a detection; however, that endpoint is not the one used or discussed in the original Horizon3 blog. Once again, I had to go back and reread the blog to make sure I hadn’t missed anything, and there it was, appearing only in passing in a source code sample:

```nginx
location /aparchive/upload { # <-- well looky here!
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";
    charset_types text/html text/xml text/plain text/vnd.wap.wml application/javascript application/rss+xml text/css application/json;
    charset utf-8;
    client_max_body_size 1536M;
    client_body_buffer_size 5000K;
    set $upload_file_dst_path "/bootflash/completeCDB/";
    access_by_lua_file /var/scripts/lua/features/ewlc_jwt_verify.lua;
    content_by_lua_file /var/scripts/lua/features/ewlc_jwt_upload_files.lua;
}

#Location block for ap spectral recording upload
location /ap_spec_rec/upload/ {
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";
    charset_types text/html text/xml text/plain text/vnd.wap.wml application/javascript application/rss+xml text/css application/json;
    charset utf-8;
    client_max_body_size 500M;
    client_body_buffer_size 5000K;
    set $upload_file_dst_path "/harddisk/ap_spectral_recording/";
    access_by_lua_file /var/scripts/lua/features/ewlc_jwt_verify.lua;
    content_by_lua_file /var/scripts/lua/features/ewlc_jwt_upload_files.lua;
}
```

As the Horizon3 blog puts it:

> This reveals upload related endpoints that utilize both `ewlc_jwt_verify.lua` and `ewlc_jwt_upload_files.lua` on the backend—perfect!
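That triage step, finding every `location` that routes through both Lua handlers, is easy to script once you have the extracted nginx configuration. This is a rough sketch. It assumes flat, non-nested `location { ... }` blocks like the ones above, so it is not a general nginx parser. The `/static/` block in the example is hypothetical.

```python
import re

# Matches "location <path> { ... }" for flat blocks (no nested braces).
LOCATION_RE = re.compile(r"location\s+(\S+)\s*\{(.*?)\}", re.DOTALL)
REQUIRED = ("ewlc_jwt_verify.lua", "ewlc_jwt_upload_files.lua")


def jwt_upload_endpoints(nginx_conf: str) -> list[str]:
    """Return location paths whose block references every script in REQUIRED."""
    return [
        path
        for path, body in LOCATION_RE.findall(nginx_conf)
        if all(script in body for script in REQUIRED)
    ]


conf = """
location /aparchive/upload {
    access_by_lua_file /var/scripts/lua/features/ewlc_jwt_verify.lua;
    content_by_lua_file /var/scripts/lua/features/ewlc_jwt_upload_files.lua;
}
location /ap_spec_rec/upload/ {
    access_by_lua_file /var/scripts/lua/features/ewlc_jwt_verify.lua;
    content_by_lua_file /var/scripts/lua/features/ewlc_jwt_upload_files.lua;
}
location /static/ {
    root /var/www;
}
"""
print(jwt_upload_endpoints(conf))  # ['/aparchive/upload', '/ap_spec_rec/upload/']
```

The result includes `/aparchive/upload`. Sharing the Lua handlers is what makes that endpoint look plausible, but a matching configuration does not prove that an endpoint is exploitable in the same way.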

To clarify, the endpoint Horizon3 used in its publicly available PoC is /ap_spec_rec/upload. Horizon3 has also confirmed to us that:

> The bash script itself won’t work since those are Cisco CLI commands and not bash commands. It’ll throw errors that the terminal is wrong when it runs [and] the aparchive endpoint doesn’t work quite the same way, so the file never even gets dropped.
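When a capture like this turns up, two quick checks help decide whether it deserves a tag. The first is what the JWT in the `Cookie` header actually claims. The second is where the multipart `filename` would resolve. The sketch below decodes the JWT payload without verifying its signature, which is acceptable for triage but never for authentication, and then normalizes the upload path. The helper names are hypothetical. The upload directory is the `$upload_file_dst_path` from the `/aparchive/upload` block quoted above. Because that endpoint reportedly never drops the file, the resolved path shows what the request attempts, not what happens on the device.

```python
import base64
import json
import posixpath


def jwt_claims(token: str) -> dict:
    """Decode a JWT's payload segment WITHOUT verifying its signature (triage only)."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # JWTs strip base64url padding
    return json.loads(base64.urlsafe_b64decode(padded))


def resolved_upload_path(upload_dir: str, filename: str) -> tuple[str, bool]:
    """Return where a multipart filename would resolve, and whether it escapes upload_dir."""
    resolved = posixpath.normpath(posixpath.join(upload_dir, filename))
    escapes = not resolved.startswith(upload_dir.rstrip("/") + "/")
    return resolved, escapes


token = ("eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9."
         "eyJyZXFpZCI6ImNkYl90b2tlbl9yZXF1ZXN0X2lkMSIsImV4cCI6MTc1MzUxOTUxNn0."
         "K7NW4LxeOjrada6-F2sUqY6Qg3iW3YTq_wY63KE5vk0")
print(jwt_claims(token))
# {'reqid': 'cdb_token_request_id1', 'exp': 1753519516}
print(resolved_upload_path("/bootflash/completeCDB/", "../../../bootflash/startup-config"))
# ('/bootflash/startup-config', True)
```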

Without a way to confirm our suspicions, this is the overwhelming problem with this kind of slop. As security researchers and detection engineers, we already have to comb through an insurmountable pile of information garbage to get to the heart of things, but at least we’ve developed a keen nose for the scent of garbage. With the rise of fake AI-generated proofs of concept, we will need to retrain our sniffers to recognize this new, unfamiliar scent.

Identifying AI-Generated PoCs: Red Flags for Detection Engineers

Based on our experience analyzing hundreds of public exploits, here are warning signs that suggest AI generation:

Inconsistent Coding Styles: AI-generated code often mixes different programming paradigms inconsistently. You might see object-oriented Python classes with functional programming imports, or Node.js code that randomly switches between callbacks and async/await patterns.

Generic Variable Names with Specific Comments: AI models often generate generic variable names (data, response, payload) while adding highly specific comments that don’t match the generic code implementation.

Cargo-Culted Security Features: Unnecessary authentication headers, SSL verification bypasses, or proxy configurations that serve no purpose for the specific vulnerability being exploited.

Perfect Documentation, Broken Code: AI excels at generating clean documentation and README files. Be suspicious of exploits with extensive documentation but minimal functional testing evidence.

Temporal Clustering: Multiple repositories created within hours of each other, often with similar commit messages and identical structural errors.
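Of these red flags, temporal clustering is the easiest to check automatically. This is a minimal sketch. It assumes you have already collected repository names and creation timestamps, for example from a code-hosting search. It chains repositories whose creation times fall within a chosen window of the previous one. The window size, names, and dates are arbitrary examples.

```python
from datetime import datetime, timedelta


def time_clusters(repos: dict[str, datetime], window: timedelta) -> list[list[str]]:
    """Group repos created within `window` of the previous one; keep groups of 2+."""
    ordered = sorted(repos.items(), key=lambda item: item[1])
    clusters: list[list[str]] = []
    current: list[str] = []
    last_time = None
    for name, created in ordered:
        # A gap larger than the window closes the current cluster.
        if last_time is not None and created - last_time > window:
            if len(current) > 1:
                clusters.append(current)
            current = []
        current.append(name)
        last_time = created
    if len(current) > 1:
        clusters.append(current)
    return clusters


repos = {  # hypothetical repositories and timestamps
    "poc-a": datetime(2025, 7, 25, 9, 0),
    "poc-b": datetime(2025, 7, 25, 10, 30),
    "poc-c": datetime(2025, 7, 25, 11, 15),
    "poc-d": datetime(2025, 7, 28, 14, 0),
}
print(time_clusters(repos, timedelta(hours=2)))  # [['poc-a', 'poc-b', 'poc-c']]
```

A cluster is only a prompt to diff the repositories against each other. Identical structural errors across the cluster are the stronger signal.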

Defensive Strategies for Detection Engineers

To combat the PoC pollution problem, detection engineering teams need to evolve their workflows:

1. Source Verification and Reputation Tracking

Maintain an internal reputation system for PoC sources. Track which researchers, repositories, and blogs consistently publish functional exploits versus those that frequently post broken code. Prioritize analysis of PoCs from verified sources.
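A reputation system does not need to be elaborate to be useful. This sketch assumes each validation attempt is recorded as worked or did not work, per source. The score is a Laplace-smoothed success rate: one imaginary success and one imaginary failure are added, so a single lucky or unlucky result cannot dominate. The class and source names are hypothetical.

```python
class SourceReputation:
    """Track how often PoCs from each source validate successfully."""

    def __init__(self) -> None:
        self._worked: dict[str, int] = {}
        self._total: dict[str, int] = {}

    def record(self, source: str, worked: bool) -> None:
        """Record one validation attempt for a PoC from `source`."""
        self._total[source] = self._total.get(source, 0) + 1
        self._worked[source] = self._worked.get(source, 0) + int(worked)

    def score(self, source: str) -> float:
        """Laplace-smoothed success rate; an unseen source scores 0.5."""
        return (self._worked.get(source, 0) + 1) / (self._total.get(source, 0) + 2)

    def ranked(self) -> list[tuple[str, float]]:
        """Known sources ordered from most to least reliable."""
        return sorted(((s, self.score(s)) for s in self._total), key=lambda x: x[1], reverse=True)


rep = SourceReputation()
for _ in range(3):
    rep.record("vendor-blog", True)
rep.record("fresh-github-account", False)
print(rep.ranked())  # [('vendor-blog', 0.8), ('fresh-github-account', 0.3333333333333333)]
```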

2. Multi-Source Validation

Never develop detection logic based on a single PoC. When possible, analyze multiple independent implementations of the same exploit. If all available PoCs use identical unusual patterns, that’s a red flag for AI generation or copy-paste propagation.
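One way to put this into practice is to extract the same artifact, such as the target URI, from every PoC you have, then measure how much they agree. This sketch assumes the artifacts are already extracted. It also assumes you track which artifacts have been confirmed against the advisory, vendor code, or real traffic. Function and field names are hypothetical.

```python
from collections import Counter


def consensus_report(artifacts: dict[str, str], confirmed: set[str]) -> dict[str, object]:
    """Summarize how independent PoCs agree on one extracted artifact (e.g. target URI)."""
    if not artifacts:
        raise ValueError("need at least one PoC")
    counts = Counter(artifacts.values())
    top, top_count = counts.most_common(1)[0]
    return {
        "distinct": len(counts),
        "top_artifact": top,
        "agreement": top_count / len(artifacts),
        # Unanimous yet unverified: possible copy-paste or a shared hallucination.
        "suspicious_consensus": len(artifacts) > 1 and len(counts) == 1 and top not in confirmed,
    }


pocs = {"repo-1": "/api/execute", "repo-2": "/api/execute", "repo-3": "/api/execute"}
print(consensus_report(pocs, confirmed={"/framework/handler/exec"}))
# {'distinct': 1, 'top_artifact': '/api/execute', 'agreement': 1.0, 'suspicious_consensus': True}
```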

3. Rapid Functional Testing

Implement lightweight testing frameworks that can quickly validate PoC functionality before investing in deep analysis. This might involve containerized vulnerable applications or automated testing scripts that can confirm whether an exploit actually works.
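A lightweight harness can be little more than "run the PoC with a timeout, then ask an oracle whether the expected side effect happened." This sketch assumes the PoC is a standalone script you can invoke. It also assumes you can write an oracle for the lab target, such as checking for a canary file or an out-of-band callback. The function name and verdict strings are hypothetical. Only run it against disposable, isolated lab targets.

```python
import subprocess
import sys
from typing import Callable


def validate_poc(cmd: list[str], oracle: Callable[[], bool], timeout: float = 60.0) -> str:
    """Run a PoC command against a lab target and classify the result.

    Returns "works" only if the oracle confirms the expected side effect;
    a clean exit code or a "success" banner alone proves nothing.
    """
    try:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return "timeout"
    except OSError as exc:  # e.g. interpreter or script not found
        return f"error: {exc}"
    if oracle():
        return "works"
    return "crashed" if proc.returncode != 0 else "no-effect"


# A "PoC" that announces success but changes nothing on the target.
print(validate_poc([sys.executable, "-c", "print('pwned!')"], oracle=lambda: False))
# no-effect
```

Keeping the oracle separate from the PoC's own output is the point. Broken PoCs often print convincing success messages.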

4. Traffic-First Analysis

Instead of starting with PoC code, begin with packet captures and network traffic from known exploitation attempts. This approach grounds detection development in real attack patterns rather than potentially fabricated code.
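Traffic-first analysis can start as simply as counting which paths actually show up in captured requests. This sketch assumes HTTP request lines have already been exported from sensors or pcaps, one request line per entry. It is not a pcap parser, and the sample lines are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def top_paths(request_lines: list[str], n: int = 5) -> list[tuple[str, int]]:
    """Count request paths (query strings stripped) across captured HTTP request lines."""
    paths = Counter()
    for line in request_lines:
        parts = line.split()
        # Expect "METHOD target HTTP/x.y"; skip anything malformed.
        if len(parts) == 3 and parts[2].startswith("HTTP/"):
            paths[urlsplit(parts[1]).path] += 1
    return paths.most_common(n)


observed = [  # hypothetical request lines
    "POST /framework/handler/exec?cmd=id HTTP/1.1",
    "POST /framework/handler/exec?cmd=whoami HTTP/1.1",
    "GET /index.html HTTP/1.1",
    "garbage",
]
print(top_paths(observed, n=2))  # [('/framework/handler/exec', 2), ('/index.html', 1)]
```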

5. Community Intelligence Sharing

Establish channels for sharing information about broken or AI-generated PoCs within the security community. If one team identifies a widespread fake exploit, that information should propagate quickly to prevent other teams from wasting research cycles.
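Sharing works best when verdicts are structured enough for other teams to ingest automatically. This sketches one possible record, serialized as JSON. The field names are hypothetical and not an existing standard. The repository URL is a placeholder, and the reasons restate the CVE-2025-20188 findings described above.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PocVerdict:
    """One team's finding about a public PoC (hypothetical schema)."""
    cve: str
    poc_url: str
    verdict: str                                  # e.g. "functional", "broken", "mislabeled"
    reasons: list[str] = field(default_factory=list)
    confirmed_by: list[str] = field(default_factory=list)


verdict = PocVerdict(
    cve="CVE-2025-20188",
    poc_url="https://example.com/some-user/fake-poc",  # placeholder, not a real repository
    verdict="broken",
    reasons=[
        "targets /aparchive/upload, which does not drop the file",
        "uploads Cisco CLI commands as a bash script",
    ],
    confirmed_by=["Horizon3"],
)
print(json.dumps(asdict(verdict), indent=2))
```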

The Broader Implications

The PoC pollution problem reflects a broader challenge in AI-assisted security research. As AI tools become more sophisticated, the line between legitimate research assistance and misleading automation becomes increasingly blurred. Detection engineers need to develop the same critical evaluation skills we apply to threat intelligence: verify sources, cross-reference claims, and test assumptions rigorously.

This isn’t an argument against AI in security research—these tools have legitimate value when used appropriately. Rather, it’s a call for defense in depth against the unintended consequences of democratized exploit generation.

Looking Forward: Adapting Detection Engineering

The security industry needs to acknowledge that the traditional PoC ecosystem has fundamentally changed. Detection engineering workflows that worked well when public exploits came primarily from skilled human researchers may not be adequate in an era of AI-generated code proliferation.

Organizations should consider investing in:

- Automated PoC validation infrastructure that can quickly test exploit functionality

- Enhanced threat intelligence platforms that track PoC source reputation and accuracy

- Collaborative analysis frameworks that allow teams to share validation results and avoid duplicate effort

- Training programs that help detection engineers identify AI-generated code patterns

The goal isn’t to eliminate AI assistance from security research, but to develop the analytical skills and technical infrastructure needed to separate valuable AI-augmented research from low-quality generated content.

The Bottom Line

The proliferation of AI-generated PoCs represents a new challenge for detection engineering teams. These superficially plausible but technically broken exploits waste research time, pollute detection logic, and create blind spots in security monitoring.

Success in this new landscape requires evolving our PoC analysis workflows to emphasize source verification, multi-source validation, and rapid functional testing. We need to maintain the healthy skepticism that serves us well in threat intelligence analysis and apply it consistently to public exploit code.

The detection engineering community has always adapted to new challenges—from encrypted malware to living-off-the-land techniques to supply chain attacks. The PoC pollution problem is just the latest evolution in our ongoing cat-and-mouse game with both attackers and, increasingly, with the tools they use to scale their operations.

Stay vigilant, validate everything, and remember: if a PoC seems too good to be true, it probably is.