def read_pcap(pcap_file = "./input.pcap"):
    global decoded_packets
    decoded_packets = []
    sniff(offline=pcap_file, session=TCPSession, prn=packet_handler)
    return decoded_packets

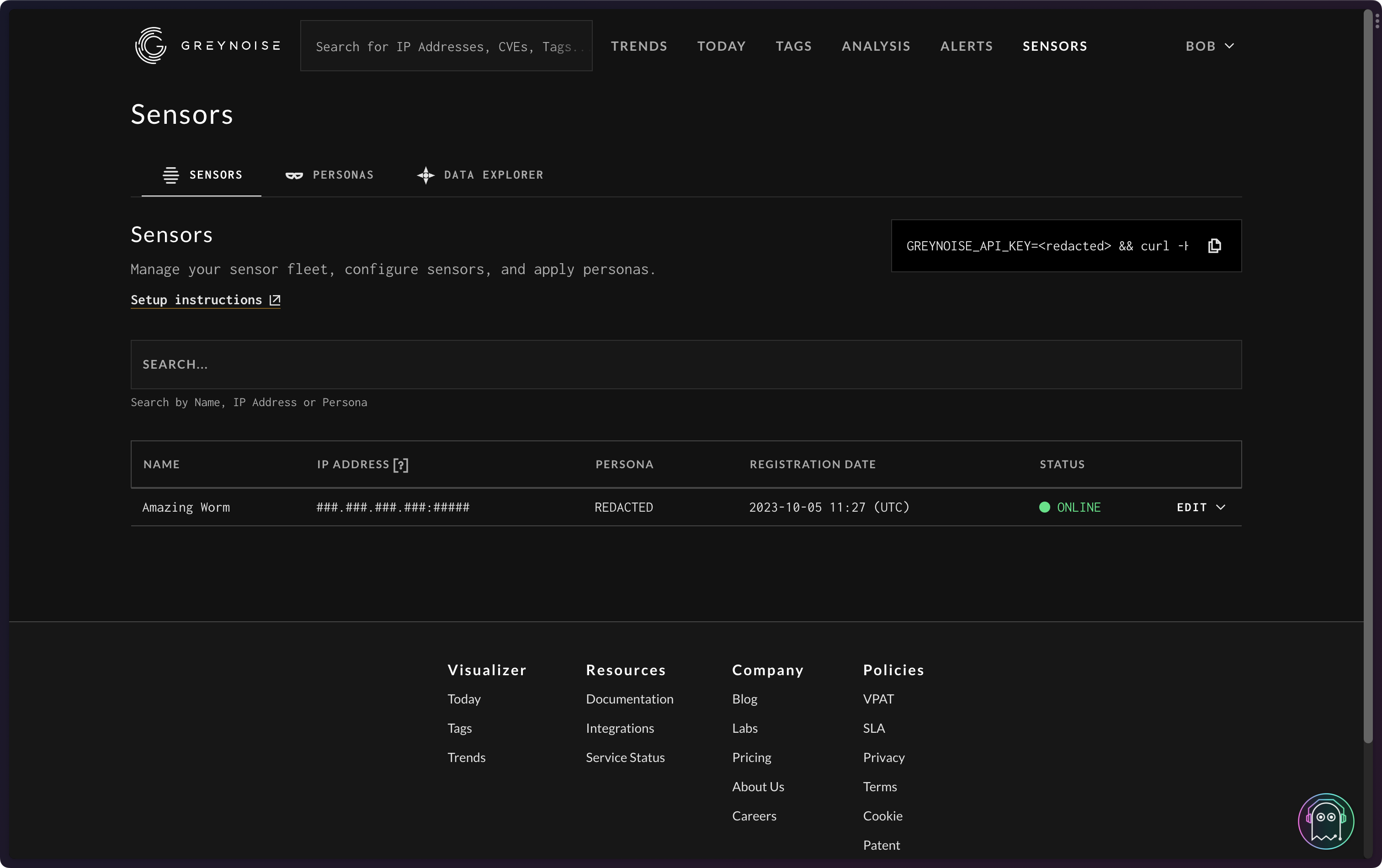

Our new hosted sensor fleet is cranking out PCAPs for those lucky folks who made it into the first round of our Early Access Program. These sensors let you give up a precious, internet-facing IPv4 address and have it automagically wired up to your choice of persona. These personas can be anything from a Cisco device to a camera, and anything in between.

While there’s a fancy “PCAP analyzer” feature “coming soon” to the GN Visualizer and API, I’ve been mostly using a sensor that’s tucked away in a fairly quiescent part of the internet to quickly triage HTTP requests to see if we can bulk up our Tag (i.e., an attack/activity detection rule) corpus with things we may have missed in the sea of traffic we collect, tag, and triage every day.

Sure, Sift helps quite a bit with identifying truly horrific things, but sometimes a quick human pass at HTTP paths, headers, and POST bodies will either identify something we may have previously missed, or cause us to think a bit differently and start identifying more of the noise. This is how our recent “security.txt scanner 🏷️” and “robots.txt scanner 🏷️” were birthed.

Folks who know me well grok that I am not a big fan of Python. However, despite said aversion, I am a big fan of Scapy, which is a Python module that empowers us with the ability to send, sniff, dissect, and forge network packets. There is zero point in reinventing this most capable wheel, and we can make it play well with a language I 💙 and respect: R. Which is what we’re going to do today.

{reticulate} To The Rescue

There is, unfortunately, no escaping Python if you’re doing modern data science work. Sure, some folks and teams may be able to get away with a pure R workflow. But, between all the fast-paced “AI” creations being “Python-first”, and the need to interoperate across organizations, it’s important to be able to use it when needed, then use a real language to Get Real Stuff Done™.

Enter the reticulate R package.

A big strength of R is being able to integrate other languages/runtimes into it via custom packages. The reticulate package does this for Python. You can read the aforementioned URL for all the details, but we’re going to use this superpower to have Scapy do the PCAP filtering and packet dissecting, then send the results to R where proper analysis work can be done.

Dissecting PCAPs

What I wanted to be able to do was to:

- Read in a PCAP file

- Filter for only HTTP request packets

- Extract the:

  - source IP

  - HTTP method, path, and version

  - HTTP headers

  - any POST body that came along for the ride

- Then return a list of those back to R

For quick triage purposes, I don’t care about nesting the headers away in a complex map column, I just want everything available to process at-will.
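To make the "everything available" idea concrete, here is a minimal Python sketch of why flat records are convenient: turning a batch of them into columns is just a union of keys, with gaps filled in. The function name `to_columns` and the sample records are hypothetical and exist only for illustration; they are not part of the workflow in this post.

```python
from typing import Any


def to_columns(records: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Turn flat records into columns, padding missing keys with None."""
    columns: dict[str, list[Any]] = {}
    # First pass: collect every key, keeping first-seen order.
    for record in records:
        for key in record:
            columns.setdefault(key, [])
    # Second pass: one value per record per column, None where absent.
    for record in records:
        for key, values in columns.items():
            values.append(record.get(key))
    return columns


# Hypothetical records, loosely shaped like one-dict-per-request output.
records = [
    {"ip": "192.0.2.10", "Method": "GET", "Path": "/", "Accept": "text/html"},
    {"ip": "192.0.2.20", "Method": "POST", "Path": "/login",
     "Content_Type": "application/json"},
]

table = to_columns(records)
print(table["Accept"])        # ['text/html', None]
print(table["Content_Type"])  # [None, 'application/json']
```

Every header ends up as its own column, so nothing has to be unpacked from a nested structure before filtering or counting.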

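We’re going to define a read_pcap() function in Python that will eventually be called from R. It is small enough that it doesn’t really need any explanation (it is the listing at the very top of this post, and it appears again in the full R example further down).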

The packet_handler() function does all the work of identifying HTTP packets and extracting the data I want. Scapy includes heuristics to identify HTTP traffic, so we’re going to lean on that vs. write our own.

The filtering going on here is done on the “data” part of this packet diagram:

def packet_handler(packet):
    # Only look at packets Scapy has identified as HTTP requests
    if packet.haslayer(HTTPRequest):
        http_packet = packet[HTTPRequest]
        method = http_packet.Method.decode()
        fields = {}
        fields["ip"] = packet[IP].src
        for key in http_packet.fields:
            try:
                # Most fields are plain bytes values
                fields[key] = http_packet.fields[key].decode('utf-8')
            except:
                # Anything else is treated as a nested mapping and flattened
                for ukey in http_packet.fields[key]:
                    fields[ukey.decode('utf-8')] = http_packet.fields[key][ukey].decode('utf-8')
        # Keep POST bodies, base64-encoded so they survive the trip to R
        if packet.haslayer(Raw) and method == "POST":
            fields["POST_Payload"] = base64.b64encode(packet[Raw].load).decode('utf-8')
        decoded_packets.append(fields)

Purists should feel encouraged to sub out a less icky if for my hacky try/except.
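For the purists, here is one way that swap might look. This is a sketch under stated assumptions, not a drop-in replacement: `flatten_fields` and `_text` are hypothetical helper names, the input is a plain dictionary standing in for `http_packet.fields`, and the nested `Unknown_Headers` entry in the sample is a stand-in for whatever dictionary-valued field turns up. It also decodes with `errors="replace"`, which is more forgiving than the strict decode in the handler above.

```python
def _text(value):
    """Decode bytes to str; fall back to str() for anything else."""
    if isinstance(value, bytes):
        # Assumption: replace undecodable bytes rather than raising.
        return value.decode("utf-8", errors="replace")
    return str(value)


def flatten_fields(raw_fields: dict) -> dict:
    """Flatten a mapping of field values into a single-level dict of strings."""
    flat = {}
    for key, value in raw_fields.items():
        if isinstance(value, dict):
            # Nested mapping: lift each entry up to the top level.
            for sub_key, sub_value in value.items():
                flat[_text(sub_key)] = _text(sub_value)
        else:
            flat[key] = _text(value)
    return flat


# Hypothetical input that mimics the shape the handler deals with.
sample = {
    "ip": "192.0.2.10",
    "Method": b"GET",
    "Path": b"/",
    "Unknown_Headers": {b"X-Test": b"1"},
}

flat = flatten_fields(sample)
print(flat)  # {'ip': '192.0.2.10', 'Method': 'GET', 'Path': '/', 'X-Test': '1'}
```

The explicit `isinstance` checks make it obvious which shapes are expected, at the cost of a few more lines than the try/except.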

Here’s how that whole thing looks inside of R (with the missing import statements added in):

library(reticulate)

hcap <- py_run_string(r"(
import base64
import logging

# tell scapy to be quiet
logging.getLogger("scapy.runtime").setLevel(logging.ERROR)

from scapy.all import *
from scapy.layers.inet import TCP
from scapy.layers.inet import IP
from scapy.layers.http import HTTPRequest

def packet_handler(packet):
    if packet.haslayer(HTTPRequest):
        http_packet = packet[HTTPRequest]
        method = http_packet.Method.decode()
        fields = {}
        fields["ip"] = packet[IP].src
        for key in http_packet.fields:
            try:
                fields[key] = http_packet.fields[key].decode('utf-8')
            except:
                for ukey in http_packet.fields[key]:
                    fields[ukey.decode('utf-8')] = http_packet.fields[key][ukey].decode('utf-8')
        if packet.haslayer(Raw) and method == "POST":
            fields["POST_Payload"] = base64.b64encode(packet[Raw].load).decode('utf-8')
        decoded_packets.append(fields)

def read_pcap(pcap_file = "./input.pcap"):
    global decoded_packets
    decoded_packets = []
    sniff(offline=pcap_file, session=TCPSession, prn=packet_handler)
    return decoded_packets
)")

Within the hcap object lies the entire namespace acquired by the Python operations, including our two functions.

This means I can do something like this:

xdf <- hcap$read_pcap(path.expand("~/Data/some-really-awfully-named.pcap"))
xdf <- data.table::rbindlist(xdf, fill=TRUE)

str(xdf)
## Classes ‘data.table’ and 'data.frame': 749 obs. of 35 variables:
## $ ip : chr "90.169.110.161" "18.119.179.222" "45.128.232.140" "93.174.95.106" ...
## $ Accept : chr "text/plain,text/html" NA NA "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8" ...
## $ Method : chr "GET" "GET" "CONNECT" "GET" ...
## $ Path : chr "/" "/.git/credentials" "google.com:443" "/" ...
## $ Http_Version : chr "HTTP/1.0" "HTTP/1.1" "HTTP/1.1" "HTTP/1.1" ...
## $ Accept_Charset : chr NA "utf-8" NA NA ...
## $ Accept_Encoding : chr NA "gzip" NA "identity" ...
## $ Connection : chr NA "close" NA NA ...
## …

…and then start the triage.
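One triage step worth spelling out: the handler stores POST bodies base64-encoded in the POST_Payload field, so they need decoding before anyone can read them. A minimal Python sketch of that step follows. The helper name `decode_post_payload` and the sample body are hypothetical, and replacing undecodable bytes is an assumption made here so binary payloads do not raise.

```python
import base64


def decode_post_payload(encoded: str) -> str:
    """Reverse the base64 step applied to POST bodies and return readable text."""
    raw = base64.b64decode(encoded)
    # Assumption: bodies are mostly text; undecodable bytes become U+FFFD.
    return raw.decode("utf-8", errors="replace")


# Hypothetical payload, encoded the same way the handler encodes POST bodies.
sample = base64.b64encode(b"user=admin&pass=admin").decode("utf-8")

print(decode_post_payload(sample))  # user=admin&pass=admin
```

The same round trip can be done on the R side instead; the point is only that the column holds an encoded copy of the raw body, not the body itself.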

FIN

Sure, one could just run these PCAPs through Zeek, tshark, Suricata, and other tools (and we do!). But, sometimes it’s faster and more convenient to “roll your own” workflow, especially if you only want certain bits from the packets and don’t want to rely on manual pages or ChatGPT for CLI syntax hints.

If you’re in our Sensors Early Access Program, give the above a go, or share how you work with the PCAPs in our Community Slack channel.